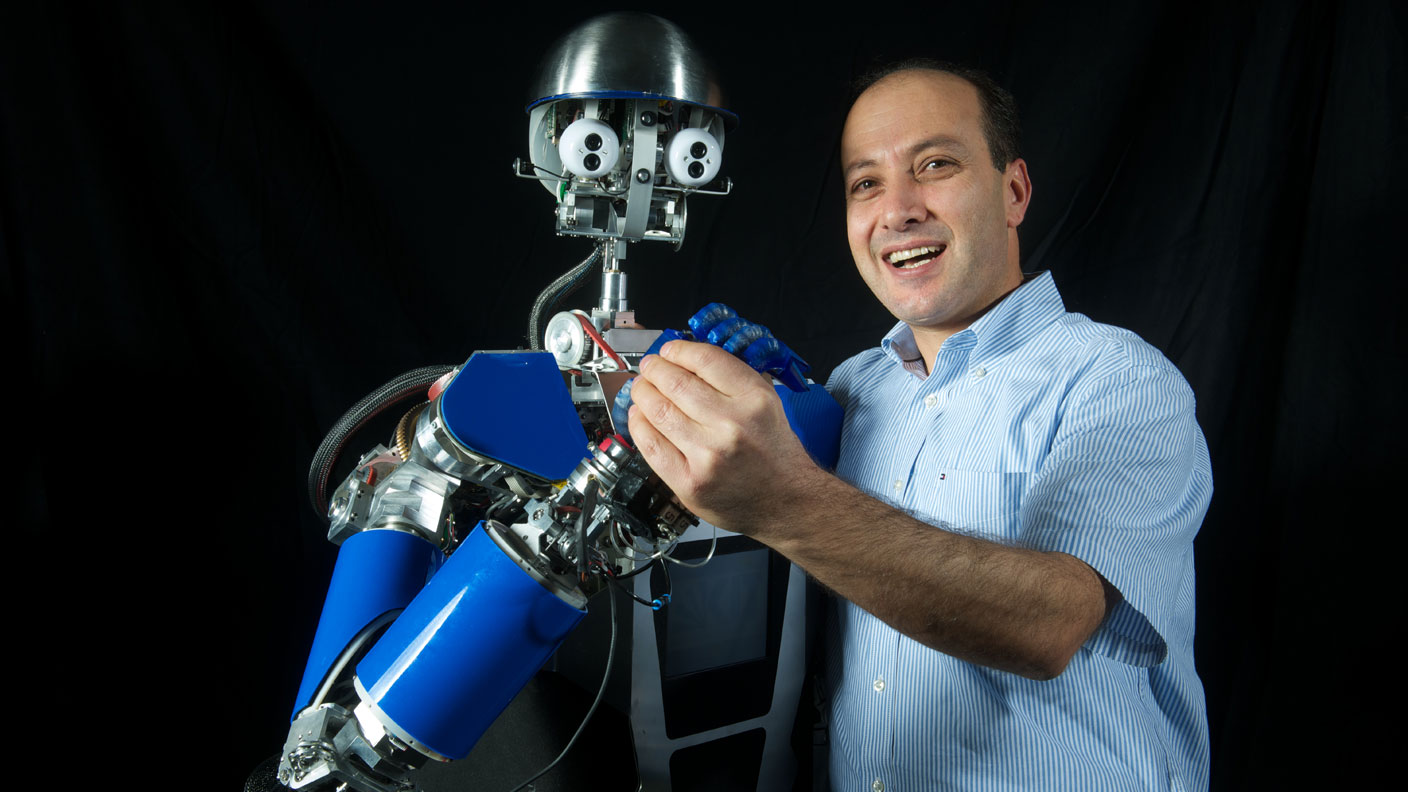

Title: Human-Robot Collaborative Manipulation in Real-World Scenarios

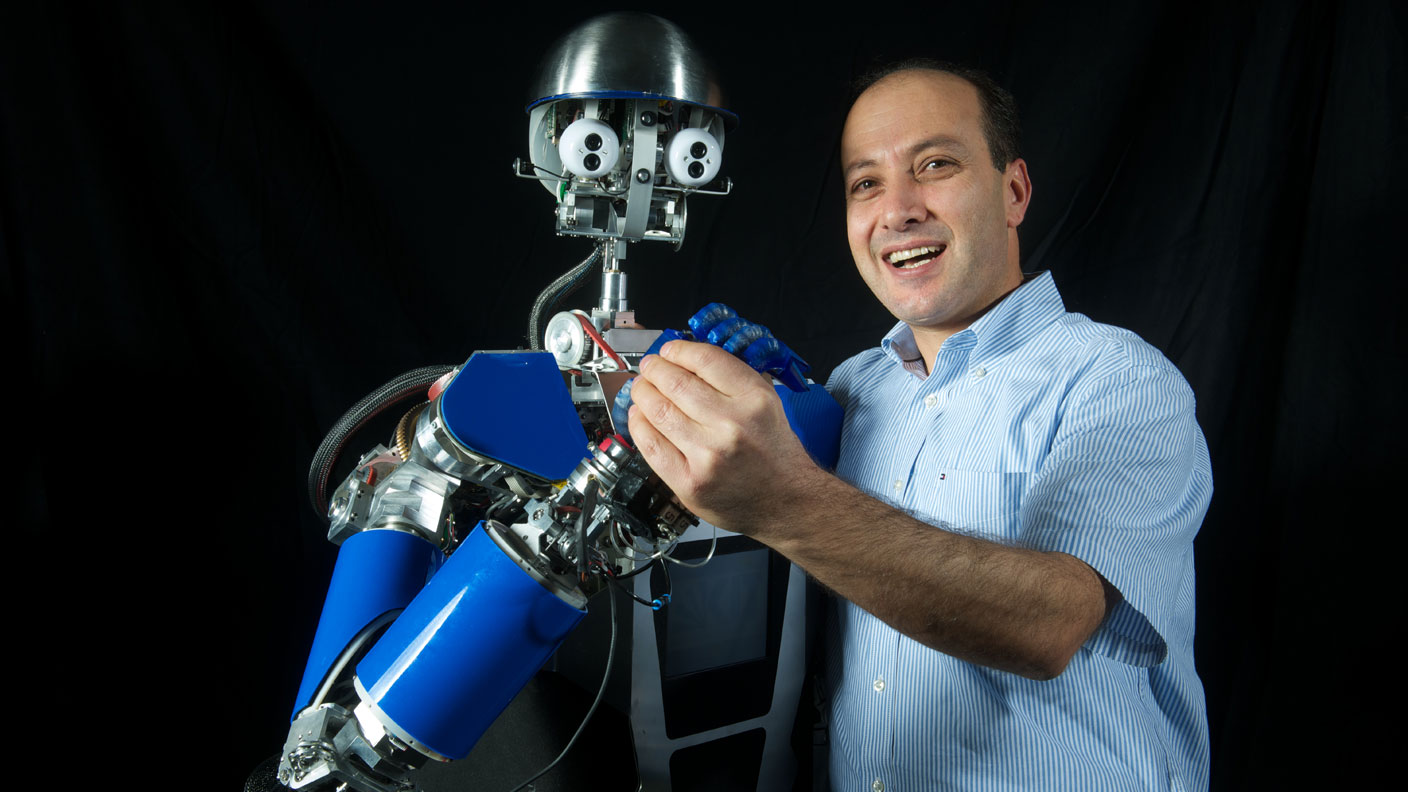

Robots that are meant to literally provide a second pair of hands to humans must be able to understand situations and reason about possible helping actions.

The talk will address the grasping and manipulation skills of ARMAR-6, a new humanoid robot developed to help technicians in industrial maintenance tasks.

I will showcase the robot’s capabilities and its performance in a challenging industrial maintenance scenario that requires human-robot collaboration, where the robot autonomously recognizes the human’s need for help and proactively provides it. The talk will conclude with a discussion of challenges, lessons learnt, and the potential transfer of the results to other domains.

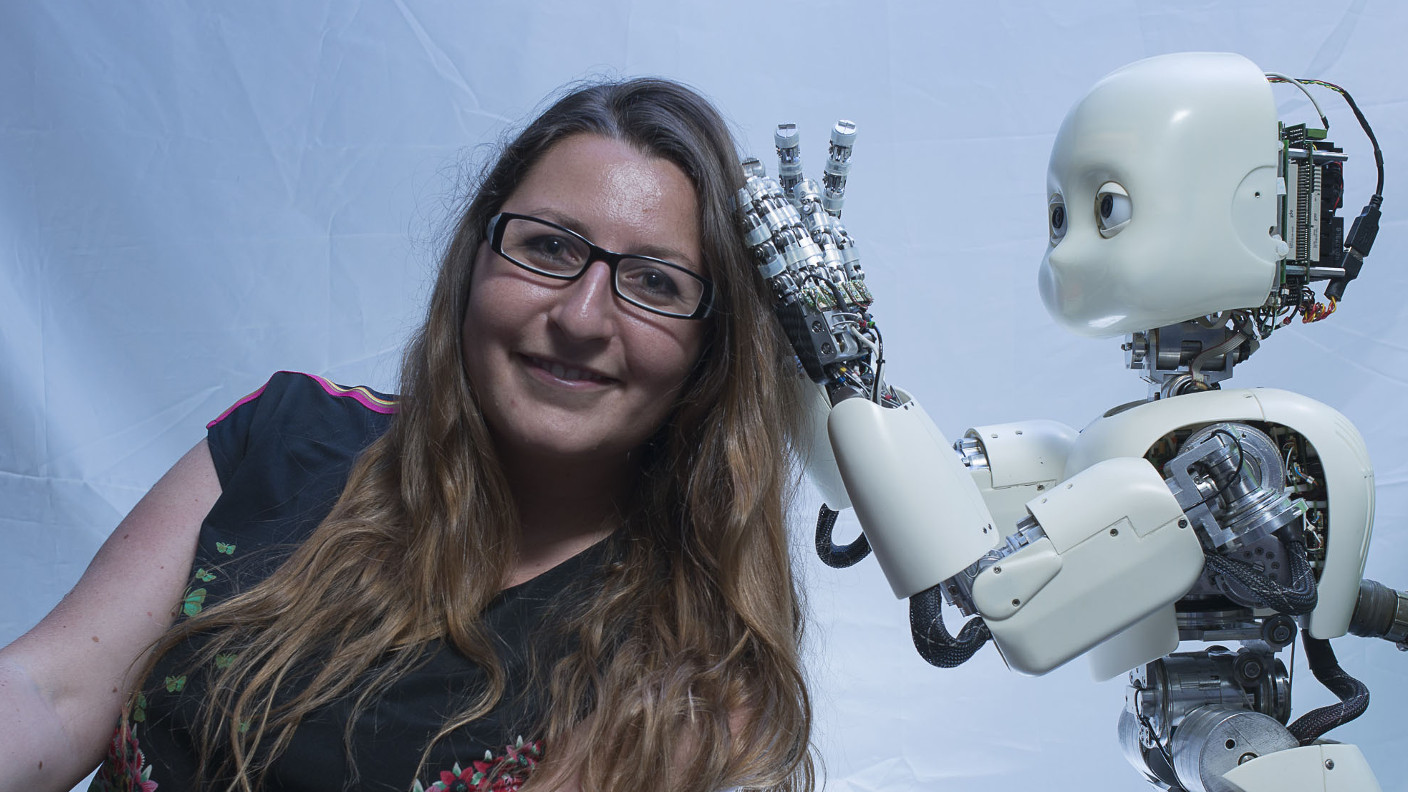

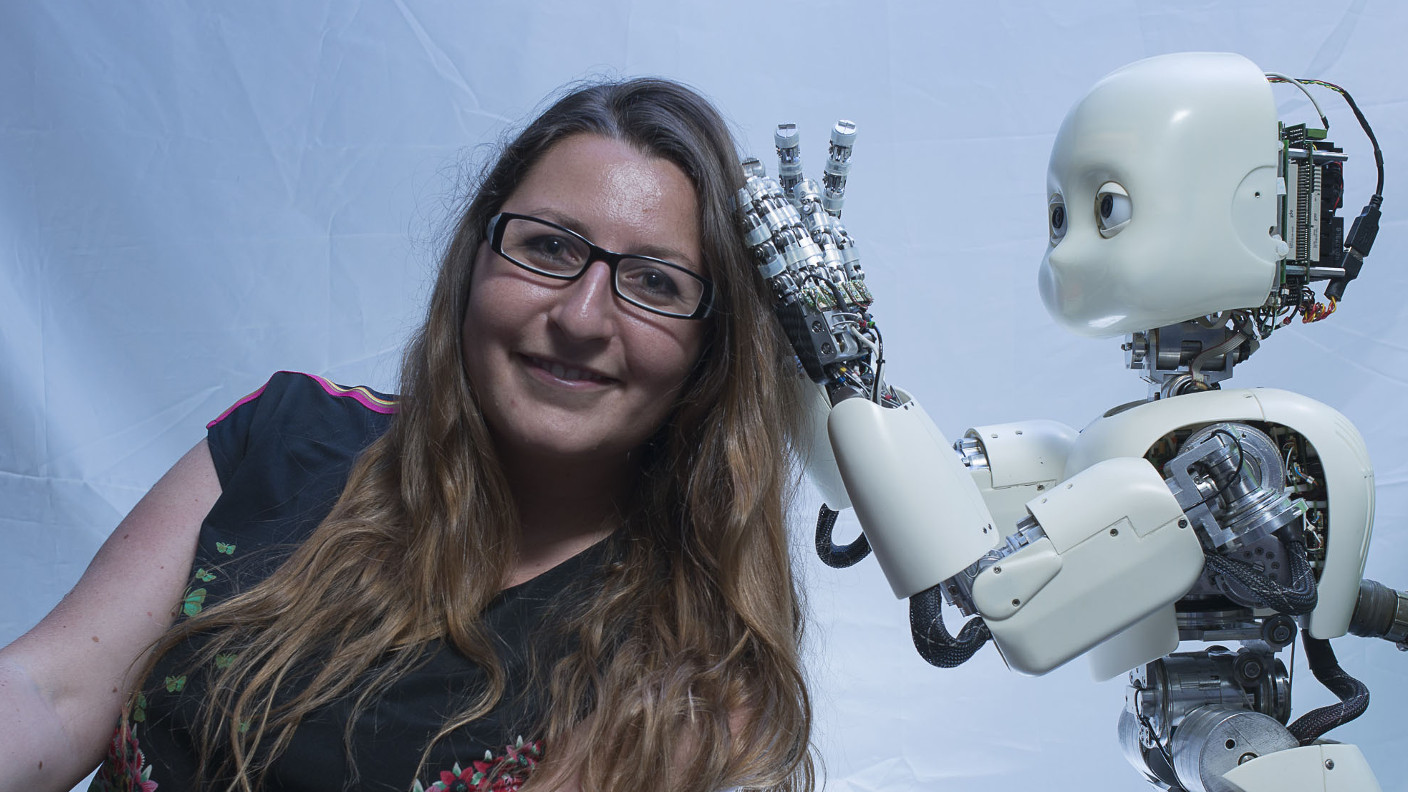

Title: Learning objects by autonomous exploration and human interaction

In this talk I will present how we approached the problem of learning the visual appearance of different objects with the iCub robot.

We exploited human interaction to teach the robot the visual appearance of objects, combining intrinsically motivated self-exploration of objects with supervised interaction with human experts. We also used human experts to teach the iCub how to assemble a two-part object.

I will conclude by presenting how this research is being used in the new project HEAP, where human expertise helps the robot grasp irregular objects.

Title: Open-ended robot learning about objects and activities

If robots are to adapt to new users, new tasks and new environments, they will need to conduct a long-term learning process to gradually acquire the knowledge needed for that adaptation. One of the key features of this learning process is that it is open-ended. The Intelligent Robotics and Systems group of the University of Aveiro has been carrying out research on open-ended learning in robotics for more than a decade. Different learning techniques have been developed for object recognition, grasping and task planning. These techniques build upon well-established machine learning techniques, ranging from instance-based learning and Bayesian learning to abstraction and deductive generalization. Our approach includes the human user as a mediator in the learning process. Key features of open-ended learning will be discussed. New experimental protocols and metrics designed for open-ended learning will also be presented.
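To make "instance-based" and "open-ended" concrete, here is a minimal sketch, assuming fixed-length feature vectors and plain nearest-neighbour matching. It is a hypothetical illustration, not the Aveiro group's implementation: the class name, methods and features are invented for this example. The point it shows is that the set of categories is never fixed in advance; the human mediator can introduce a new label at any time, and a correction is simply another stored instance.

```python
import math
from typing import Dict, List, Optional, Sequence


class InstanceBasedLearner:
    """Hypothetical open-ended learner: each category is the set of its taught instances."""

    def __init__(self) -> None:
        self.instances: Dict[str, List[List[float]]] = {}

    def teach(self, label: str, features: Sequence[float]) -> None:
        # The human mediator names an object; an unseen label creates a new category.
        self.instances.setdefault(label, []).append(list(features))

    def classify(self, features: Sequence[float]) -> Optional[str]:
        # Nearest neighbour over all stored instances; None before any teaching.
        best_label: Optional[str] = None
        best_dist = math.inf
        for label, examples in self.instances.items():
            for example in examples:
                d = math.dist(example, features)
                if d < best_dist:
                    best_label, best_dist = label, d
        return best_label

    def correct(self, features: Sequence[float], true_label: str) -> None:
        # A user correction is stored as one more instance of the right category.
        self.teach(true_label, features)


learner = InstanceBasedLearner()
learner.teach("mug", [0.9, 0.1])
learner.teach("plate", [0.1, 0.9])
print(learner.classify([0.8, 0.2]))    # mug
learner.teach("spoon", [0.5, 0.5])     # a new category can appear at any time
print(learner.classify([0.55, 0.45]))  # spoon
```

Nothing is retrained when a category is added, which is what makes instance-based methods a natural fit for open-ended settings; the cost is that classification time grows with the number of stored instances.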

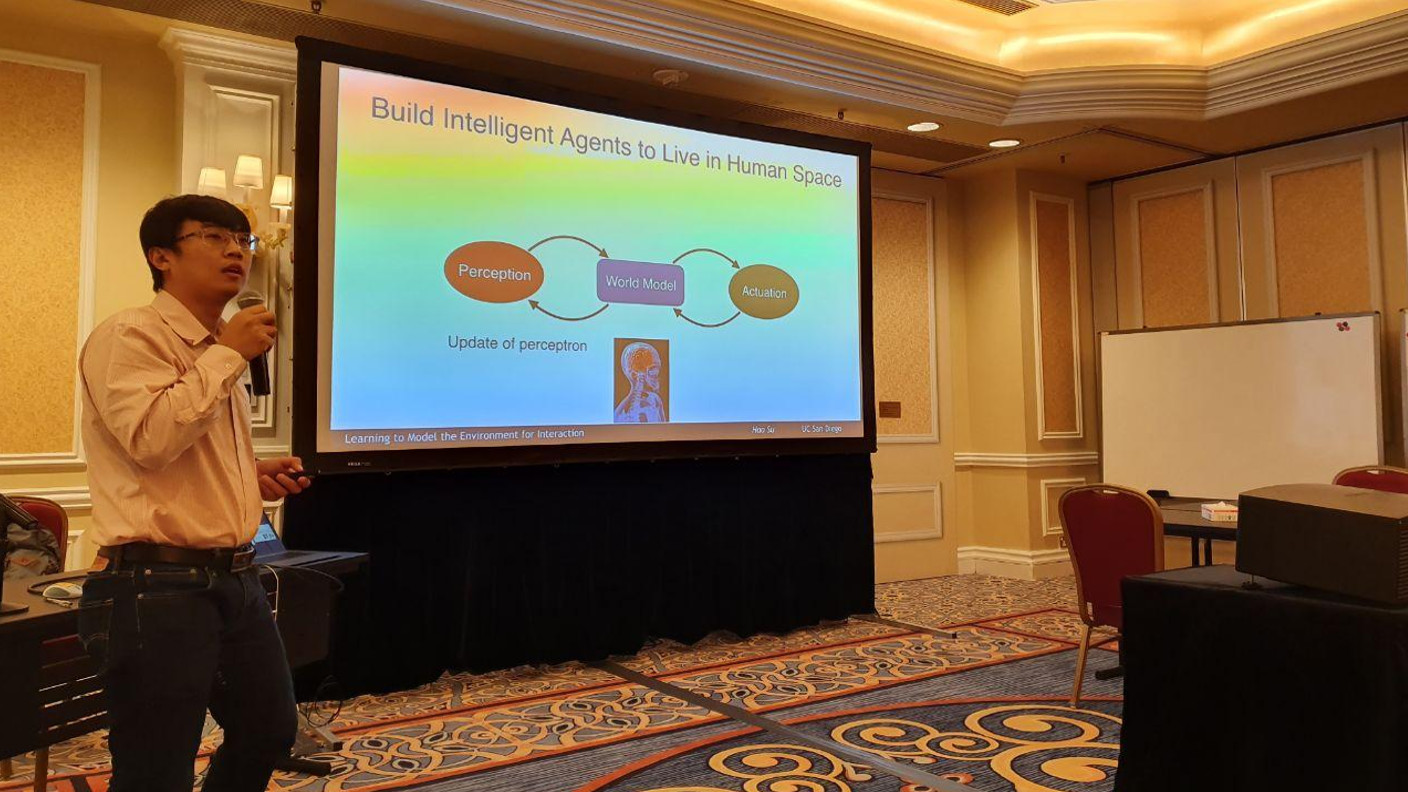

Title: Learning to Model the Environment for Interaction

Having a compact yet informative representation of the environment is vital for learning interaction policies in complex scenes.

In this talk, I will go over three recent papers from my group aimed at bridging the gap between environment modeling and interaction: 1) a CoRL 2019 paper that studies how to learn 6-DoF grasping poses in a cluttered scene captured from a single viewpoint using a commodity depth sensor; 2) a NeurIPS 2019 paper on model-based reinforcement learning that maps the state space using automatically discovered landmarks; and 3) a CVPR 2019 paper on building a large-scale 3D dataset with fine-grained part segmentation and mobility information.

Title: Predictive Coding as a Computational Theory for Open-Ended Cognitive Development

My talk presents computational models for robots to acquire cognitive abilities as human infants do.

A theoretical framework called predictive coding suggests that the human brain works as a predictive machine; that is, the brain tries to minimize prediction errors by updating its internal model and/or by acting on the environment. Inspired by this theory, I have suggested that predictive learning of sensorimotor signals leads to open-ended cognitive development (Nagai, PhilTransB 2019). Neural networks based on predictive coding have enabled our robots to successively learn to generate goal-directed actions, estimate the goals of other agents, and assist others trying to achieve a goal. This result demonstrates how both non-social and social behaviors emerge from a shared mechanism of predictive learning.

I discuss the potential of the predictive coding theory to account for the underlying mechanism of open-ended cognitive development.
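The two routes for reducing prediction error named above (updating the internal model, or acting on the environment) can be illustrated with a toy scalar example. This is a minimal sketch under loudly stated assumptions: a one-parameter linear forward model, a made-up environment where sensation equals 2.0 times the action, and plain gradient descent on the squared error. It is not the neural network models referred to in the abstract; the function names are hypothetical.

```python
from typing import Iterable, Tuple


def learn_internal_model(samples: Iterable[Tuple[float, float]],
                         lr: float = 0.1, epochs: int = 200) -> float:
    """Route 1: reduce prediction error by updating the internal model."""
    pairs = list(samples)
    w = 0.0  # internal model (assumption): predicted sensation = w * action
    for _ in range(epochs):
        for action, sensation in pairs:
            error = sensation - w * action  # prediction error
            w += lr * error * action        # gradient step on 0.5 * error**2 w.r.t. w
    return w


def act_to_reduce_error(w: float, desired: float,
                        lr: float = 0.1, steps: int = 200) -> float:
    """Route 2: keep the model fixed and change the action instead."""
    action = 0.0
    for _ in range(steps):
        error = desired - w * action  # error between desired and predicted sensation
        action += lr * error * w      # gradient step on 0.5 * error**2 w.r.t. action
    return action


# Toy environment (hypothetical): sensation = 2.0 * action.
data = [(a, 2.0 * a) for a in (0.5, 1.0, 1.5)]
w = learn_internal_model(data)
print(round(w, 3))                            # 2.0
print(round(act_to_reduce_error(w, 3.0), 3))  # 1.5
```

Both functions minimize the same quantity, a squared prediction error; they differ only in which variable is adjusted. That shared objective is the intuition behind the claim that one mechanism can underlie different behaviors.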

Title: It kinda works! Challenges and Opportunities for Robot Perception in the Deep Learning Era

Spatial perception has witnessed unprecedented progress in the last decade. Robots are now able to detect objects, localize them, and create large-scale maps of an unknown environment, which are crucial capabilities for navigation and manipulation. Despite these advances, both researchers and practitioners are well aware of the brittleness of current perception systems, and a large gap still separates robot and human perception. This talk discusses two efforts targeted at bridging this gap. The first focuses on robustness: I present recent advances in the design of certifiably robust spatial perception algorithms that tolerate extreme amounts of outliers and afford performance guarantees. These algorithms are “hard to break” and are able to work in regimes where all related techniques fail. The second effort targets metric-semantic understanding. While humans are able to quickly grasp both geometric and semantic aspects of a scene, high-level scene understanding remains a challenge for robotics. I present recent work on real-time metric-semantic understanding, which combines robust estimation with deep learning. I discuss these efforts and their applications to a variety of perception problems, including mesh registration, image-based object localization, and SLAM.

Title: Challenges of Self-Supervision via Interaction in Robotics

Deep learning has been revolutionizing robotics over the last few years. Leveraging data to learn robotic skills is critical for generalization in certain robotic tasks, such as grasping new objects. We will discuss challenges we encountered when learning on real robots, and the progress we've made in tackling them. Learning robotic tasks in the real world requires our algorithms to be data efficient, or requires us to scale our data collection efforts. Real-world tasks have much more diversity and visual complexity than most simulated domains. Some challenges are not captured in existing simulated domains at all, such as latency caused by sensors and real-time computation, which may impact the performance of certain reinforcement learning algorithms. In this talk, we will discuss how we can learn complex closed-loop self-supervised grasping behaviors on real robots using deep reinforcement learning. We will discuss how we can leverage sim-to-real techniques to gain orders of magnitude in data efficiency, as well as new reinforcement learning algorithms that can scale to visual robotic tasks and generalize to new objects. We will then take a peek at the challenges that lie ahead of us, beyond grasping objects within a bin.

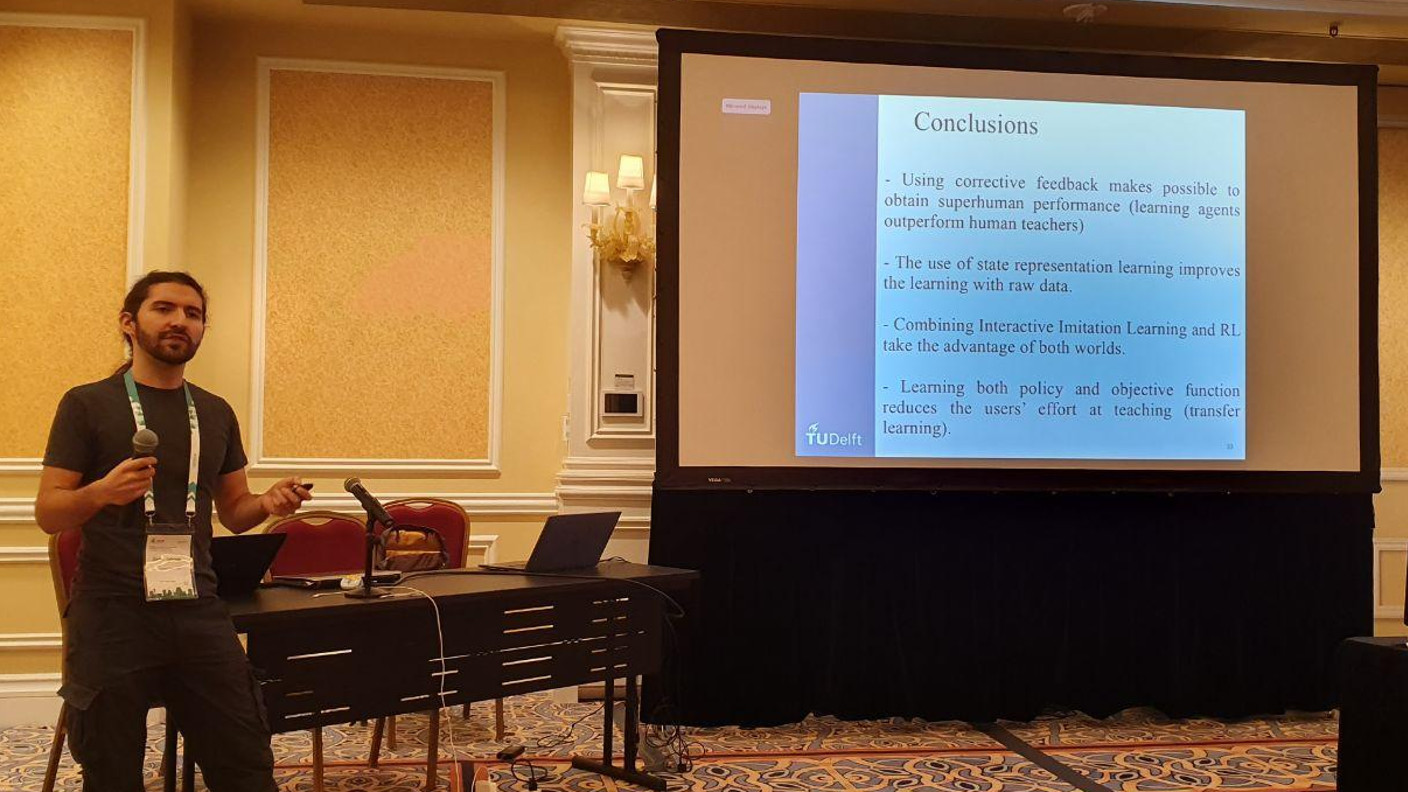

Title: Teaching Robots Interactively from Few Corrections: Learning Policies and Objectives

Learning manipulation and other complex robotic tasks directly on the real system seems like a simple and straightforward strategy. However, for challenging tasks it remains infeasible, due to the prohibitive amount of physical experience needed in autonomous (reinforcement) learning, and to the lack of any source of proper expert demonstrations in imitation learning. Non-expert end users who still need flexible and adaptable robots require methods that demand less detailed information from the user and are more robust to mistaken feedback. This talk focuses on learning methods that allow non-expert users to train robots to perform complex tasks from few, imprecise interactions. Using occasional relative corrections and the user's preferences, it is possible to learn both policies and the objective function of the task the user wants to teach. All of this is achieved within a few iterations, ensuring data efficiency and therefore making the approach feasible for real systems.
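The idea of learning a policy from occasional relative corrections can be sketched in a few lines. This is a minimal, hypothetical illustration, not the speaker's method. It makes several assumptions: a linear policy, a teacher who only says "more" (+1), "less" (-1) or nothing (0), and a fixed nudge size `step` that turns each correction into a regression target for a single gradient step. The class, the simulated user and all parameter values are invented for this example.

```python
from typing import List, Sequence


class CorrectablePolicy:
    """Hypothetical linear policy a = w . x, trained only from relative corrections."""

    def __init__(self, n_features: int, step: float = 0.5, lr: float = 0.5) -> None:
        self.w: List[float] = [0.0] * n_features
        self.step = step  # assumed magnitude of one "more"/"less" nudge
        self.lr = lr      # learning rate of the supervised policy update

    def act(self, x: Sequence[float]) -> float:
        return sum(wi * xi for wi, xi in zip(self.w, x))

    def correct(self, x: Sequence[float], h: int) -> None:
        """h = +1 ("more"), -1 ("less"), 0 (no feedback this step)."""
        if h == 0:
            return
        # The corrected target is act(x) + h * step, so the regression error
        # (target - act(x)) is simply h * step; take one gradient step toward it.
        for i, xi in enumerate(x):
            self.w[i] += self.lr * h * self.step * xi


def simulated_user(action: float, wanted: float, tolerance: float = 0.3) -> int:
    # Hypothetical teacher: only says "more" or "less", never the exact value.
    diff = wanted - action
    if abs(diff) < tolerance:
        return 0
    return 1 if diff > 0 else -1


policy = CorrectablePolicy(n_features=1)
x = [1.0]
corrections = 0
for _ in range(20):
    h = simulated_user(policy.act(x), wanted=2.0)
    corrections += h != 0
    policy.correct(x, h)
print(policy.act(x), corrections)  # 1.75 7
```

The user never supplies the correct action, only its direction, and stops giving feedback once the behavior is acceptable; here seven binary corrections suffice. The fixed `step` is the main design knob: larger steps need fewer corrections but give coarser final behavior.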