We will now model an instance of CIWLI “with reference”, and solve it using the trusted third person solution as mentioned in [FNW96], using Kripke models and action models, as described in [BAL99].

First, we give an informal description of the simplified protocol, then we mention the assumptions that we set for our model, we proceed then to derive from these assumptions the logic structure and axioms that apply to our models, and how these axioms in turn put certain restrictions on the models. We close by giving example models and action structures for the CIWLI protocol.

Assume that we have three agents: A, B, and C. Agents A and B want to know if their beliefs are compatible. In our simplistic model, we limit ourselves to considering a single proposition p, which the agents may either believe (they “know” p is true), or they may not believe it (they “know” p is not true).

For example, let p be the proposition that “Google will go bankrupt within 5 years”. Now agent A may believe p to be true, while agent B may believe p to be false. And the agents wish to find out if they have the same beliefs regarding proposition p, but without leaking any information to the other agent (for instance a “walk away” situation, where A finds out about B's belief, but does not share her own belief with B).

This is why agents A and B turn to a trusted third party C. A and B tell C their belief regarding p, and C will tell A and B if their beliefs were compatible or not.

Now we first make a few assumptions. In the following we use the terms “knowing” and “believing” interchangeably, since in our models these concepts mean the same. Our Kripke models capture beliefs, not knowledge. The most important consequence of this is that agents may believe things that are not true, i.e. the real state of p does not matter in our models, only what A and B believe regarding p.

We furthermore assume that all agents play fair, i.e. they do not lie, or try to cheat. They all know the CIWLI protocol and agree to participate in it.

As preconditions, we have that both A and B know which proposition they will be comparing their beliefs about, and they know what they believe themselves about p, but they do not know what the other agent believes about p. So we are effectively modeling an instance of CIWLI “with reference”, where the reference is proposition p, and the “secrets” that the agents wish to compare is their beliefs regarding the “truth value” of p.

Furthermore, we have that A and B either believe in p, or that they believe in ~p, but they do not consider both options as a possibility, i.e. they are not “unsure” about the “truth value” of p. The reasoning behind this design decision is that if the agents were unsure about what to think of p, then the usefulness and meaningfulness of the comparison of C would be debatable, as would the protocol itself.

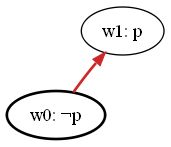

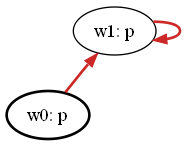

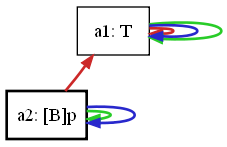

Our Kripke models are directed models, i.e. they have a single “real” state that functions as a start state for the beliefs (denoted as an ellipse with a thickened line in our images). For example, consider an arrow for agent A from the start state to a state where p holds; this should be read as saying “agent A believes that p holds”, this situation is illustrated in the image below (in this model, the red arrow denotes the belief of agent A).

|

| Figure a |

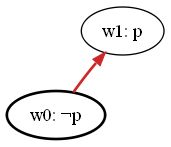

Another example: two subsequent arrows, the first going from the start state to a state w1, the second going from w1 to a state w2, where ~p holds; the first one labeled as A, and the second one labeled as B. This should be read as saying “A believes that B believes that p does not hold”, see the image below (the lightblue arrow denotes the belief of agent B in this model).

|

| Figure b |

Of course in theory, we could make these models infinitely large (A believes that B believes that A believes that...). However, for CIWLI, only two layers of relations are needed: we have the first layer denoting what each agent believes in, and the second layer denoting what an agent thinks that the other agent believes. These two layers are enough to model our problem, because CIWLI is really about finding out what the other thinks; things such as finding out what the other thinks that we think, may be interesting, but irrelevant for the problem of CIWLI.

At the start of the protocol, we assume that the beliefs of A and B regarding p are fixed and do not change during the execution of the protocol. What does change is what they believe about what the other agent believes: at the start we have that both agents A and B consider two possibilities: a scenario where the other agent believes p and a scenario where the other agent believes ~p; then after executing the protocol, the agents will only consider one of these scenarios (the one corresponding to what the other agent actually believes).

As said, in our model, we will try to avoid constructions such as “A knows that A knows that...” as much as possible, since for our problem, these are superfluous. Constructions of the form “A knows that B knows that...” are very useful however, since they convey the information that we are interested in.

In this section, we will go over the most common axioms used in Kripke models, and decide if they should hold in our models or not, and what the implications are of these axioms to our models.

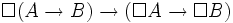

First off, we have the K axiom (images in this section are courtesy of Wikipedia):  . We conclude that this option should hold for our Kripke models; this result is trivial however, since in CIWLI we do not use any direct implications, and allowing K has no impact on the structure of the models.

. We conclude that this option should hold for our Kripke models; this result is trivial however, since in CIWLI we do not use any direct implications, and allowing K has no impact on the structure of the models.

Secondly there is the T axiom:  . This is of course not true, since we established that, for example, A might believe that p, while B might not believe p. This would imply that in the start state, both p and ~p should be true. This can of course not be the case, so axiom T does not apply to our models. We reiterate ourselves by saying that the actual state of p in the models that we show later is really irrelevant, and is only included for syntactical completeness: the state of p in the real world does not have a real meaning or impact on our models. The effect on our models is that our models should not necessarily exhibit reflexive behavior, i.e. it is not required that all agents have a reflexive arrow in each state (reflexive arrows are not prohibited of course, so they may still occur somewhere).

. This is of course not true, since we established that, for example, A might believe that p, while B might not believe p. This would imply that in the start state, both p and ~p should be true. This can of course not be the case, so axiom T does not apply to our models. We reiterate ourselves by saying that the actual state of p in the models that we show later is really irrelevant, and is only included for syntactical completeness: the state of p in the real world does not have a real meaning or impact on our models. The effect on our models is that our models should not necessarily exhibit reflexive behavior, i.e. it is not required that all agents have a reflexive arrow in each state (reflexive arrows are not prohibited of course, so they may still occur somewhere).

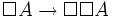

The 4 axiom says that  , which would enforce the transitive property on our models. We note that we have no statements of the kind “A knows that A knows that...” that we wish to express in our models, but if we had them, we would not wish to make a distinction between “A knows that A knows that A” and “A knows that A”, since in our models that would express the same notion.

, which would enforce the transitive property on our models. We note that we have no statements of the kind “A knows that A knows that...” that we wish to express in our models, but if we had them, we would not wish to make a distinction between “A knows that A knows that A” and “A knows that A”, since in our models that would express the same notion.

|

| Figure c |

The D axiom states that  . In our context this would mean that if A knows p, then there must be a world where p holds. We decide that this property should indeed hold for our models, because we want that if A knows p, it should consider at least one world where p holds, because we decided that the knowledge our agents A and B have regarding p should be derivable from the model. If the D axiom would not apply to our models, then we could derive any knowledge from our models, which is certainly not the intention, thus, we conclude that axiom D should hold for our models. This axiom implies seriality on our models, so we must make sure that every state in our models has at least one outgoing arrow for every agent.

. In our context this would mean that if A knows p, then there must be a world where p holds. We decide that this property should indeed hold for our models, because we want that if A knows p, it should consider at least one world where p holds, because we decided that the knowledge our agents A and B have regarding p should be derivable from the model. If the D axiom would not apply to our models, then we could derive any knowledge from our models, which is certainly not the intention, thus, we conclude that axiom D should hold for our models. This axiom implies seriality on our models, so we must make sure that every state in our models has at least one outgoing arrow for every agent.

We furthermore consider the B axiom:  . This axiom says that if p holds, then the agents would know that there exists a state where p holds, this property would enforce the symmetric property on our models. While this axiom may hold for some of the states in our models, it should certainly not hold for the start state, since if an agent were to have an arrow back to the start state, he would know what the other agents were thinking, and this is not what we want in our models. So we conclude that the B axiom should not apply to our models, and that our models should not necessarily be symmetric.

. This axiom says that if p holds, then the agents would know that there exists a state where p holds, this property would enforce the symmetric property on our models. While this axiom may hold for some of the states in our models, it should certainly not hold for the start state, since if an agent were to have an arrow back to the start state, he would know what the other agents were thinking, and this is not what we want in our models. So we conclude that the B axiom should not apply to our models, and that our models should not necessarily be symmetric.

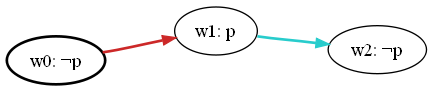

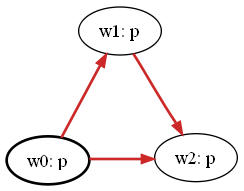

The last axiom we consider is the 5 axiom, stating that:  . In our context, this would mean that if p is a possibility of agent A, then A would know that this is a possibility, see the image below.

. In our context, this would mean that if p is a possibility of agent A, then A would know that this is a possibility, see the image below.

|

| Figure d |

While this is a property that in reality may very well hold for our models, for the sake of simplicity, we chose to restrict our models to knowledge of the first level, that is, we only consider facts of the form “A knows p” and “A knows that B knows that p”, and we omit reasoning about statements such as “A knows that A knows that p”, since that expresses facts on a deeper level of reasoning that we are not interested in. We conclude that our models should not abide by axiom 5, so our models should not necessarily reflect Euclidean behavior.

We conclude this section by summarizing our results regarding the axioms that should apply to our models:

| name | should hold | impact on models |

|---|---|---|

| K | Yes | None. |

| T | No | None: models are not necessarily reflexive, but reflexive relations may still occur (arrows having the same state as start- and endstate). |

| 4 | Yes | None: models should reflect the transitive property, however this axiom has no real impact on our models since such relations do not occur anywhere. |

| D | Yes | Models should reflect the serial property: all states should have at least one outgoing arrow for all agents. |

| B | No | None: models are not necessarily symmetric, but symmetric behavior may still occur (bi-directional arrows). |

| 5 | No | None: models are not necessarily Euclidean, but Euclidean relations may still occur (to be more specific, only those type of Euclidean relations where two or three of the three states in the formula are the same state). |

We conclude that our logic is of the form KD4, and that our models should be transitive and serial.

We will now model an instance of CIWLI where A and B both believe p, and in the real world (the start state), p is also true. (Although we do not use this fact, as the value of p in the start state (the real value of p), is irrelevant for our model of CIWLI. We have to include it however for our models to be valid KD45 models.)

In this section, we use the notation K_A p to denote that “agent A knows p”, or “agent A believes p”, corresponding to the box-with-subscript notation that is used in [BAL99]. We also use terms such as “the beliefs of agent C” or “the branches of agent C” to denote the branches starting from the start state with an arrow labeled C.

We model this instance of CIWLI in four steps.

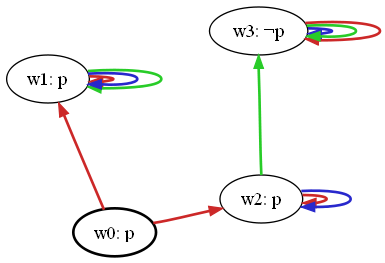

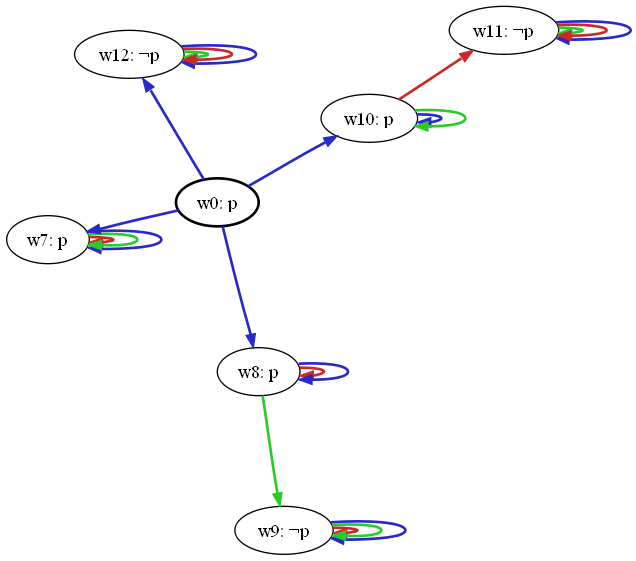

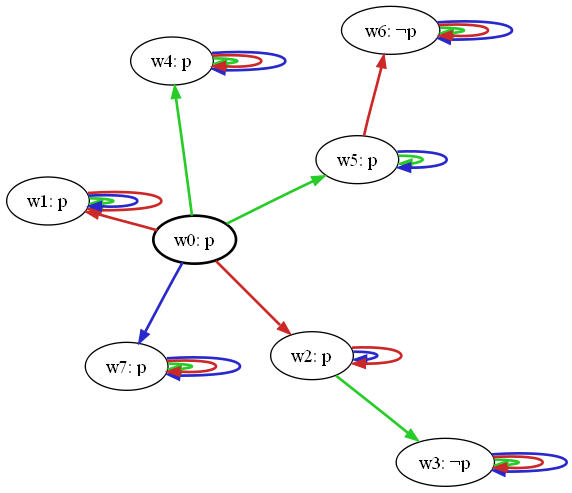

Our first model will be a Kripke model of the state before the protocol begins; we will build this model in steps below. Please note that the red arrows indicate beliefs of agent A, the green arrows those of agent B and the blue arrows belong to agent C. The images in this section were generated by our implementation that automatically generates images from relations specified in XML.

|

| Figure 1 |

|

| Figure 2 |

Here we make two separate paths from the start state to denote that A either believes that B believes p, or A believes that B believes that ~p, but A does not believe that B is unsure about p (that B would consider both p and ~p possible).

Note that in the branch where “A believes that B believes that ~p”, by our seriality axiom, we had to to add an outgoing arrow representing what A believes that B believes that A believes. Since this arrow is purely there for syntactical reasons, and we do not use reasoning of this level in the analysis of the Kripke model, we arbitrarily chose that A believes that B believes that A believes ~p, and omitted the scenario where A believes that B believes that A believes p. Such simplifying omissions that do not influence our reasoning regarding the CIWLI protocol are also made in the other images in this section, often without mentioning it. The convention we maintain with these is that meaningless arrows are always reflexive arrows.

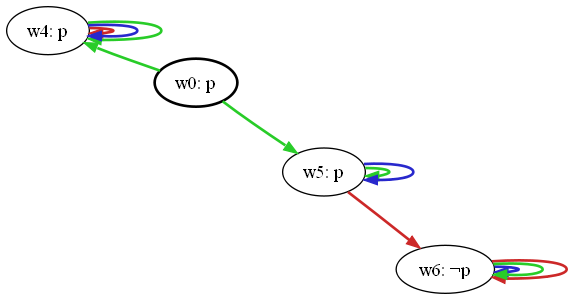

|

| Figure 3 |

|

| Figure 4 |

|

| Figure 5 |

|

| Figure 6 |

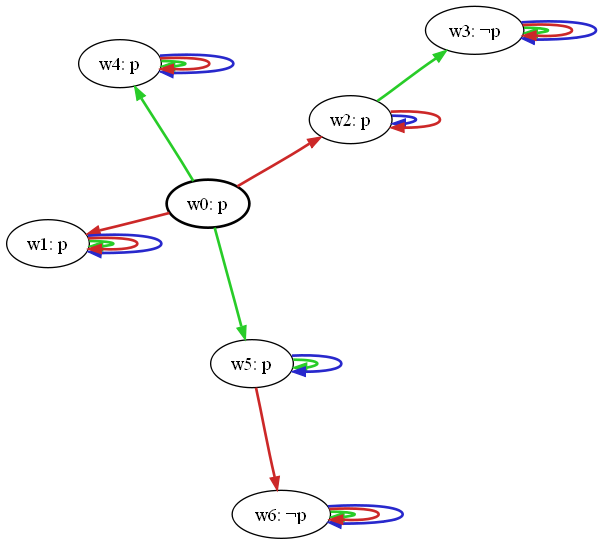

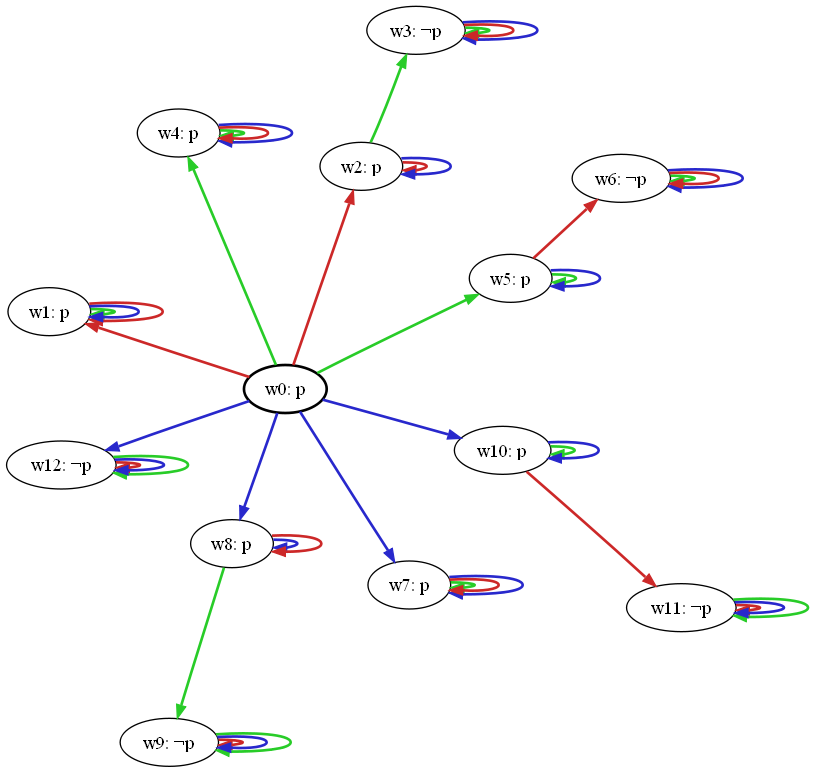

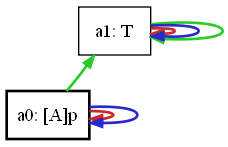

Now the second step in the CIWLI protocol is that A tells C his belief regarding p.

We model this using an action model where the action is “agent C learns K_A p”. Agent B may not follow this conversation so although his beliefs regarding what agent C knows about what agent A believes have changed, we do not model this type of knowledge in our models, and so the knowledge of agent B in our model will not change, hence agent B thinks that nothing is going on.

|

| Figure 7 |

The image displays the type of action that is named a “secure group announcement with no suspicion” in [BAL99], meaning that a group of agents (two in our case) share some knowledge while outsiders (agent C in our case) are oblivious to this. Here, a0 is the actual action that is taking place, namely “agent C learns K_A p” (read: “agent C learns that agent A believes that p”). This action has as precondition that K_A p holds. Action a1 is what happened according to agent B, for as much as we are concerned: “nothing happened”, and this action has T (true) as precondition.

Applying this action model to our Kripke model of Figure 6 of course changes our model. Note that only the knowledge of agent C has changed (agent A already knew that agent A knows that p; moreover, this knowledge could already be derived from the Kripke model), so we only need to adjust the branches that start from the start state with an arrow from agent C, since in our model, we are not interested in the knowledge that the other agents think that C believes, and so we do not update the other branches.

Following the rules of actions and updates to Kripke models, we should maintain only those states where C believes that K_A p, or: K_C K_A p. This results to deleting two branches from the image of Figure 7:

|

| Figure 8 |

The third step is that B tells C his belief regarding p, this is done similarly to step 2.

Action model where “agent C learns K_B p”:

|

| Figure 9 |

Analogously to the previous step, in applying this action, we note that only the direct beliefs of agents B and C might have changed, since according to agent A, “nothing happened”. Agent B already knew that agent B believes p, so that the only branches we have to change is those of agent C. Of the two C-branches left in our Kripke model, only one is compatible with “K_C K_B p”, so we delete the other branch:

|

| Figure 10 |

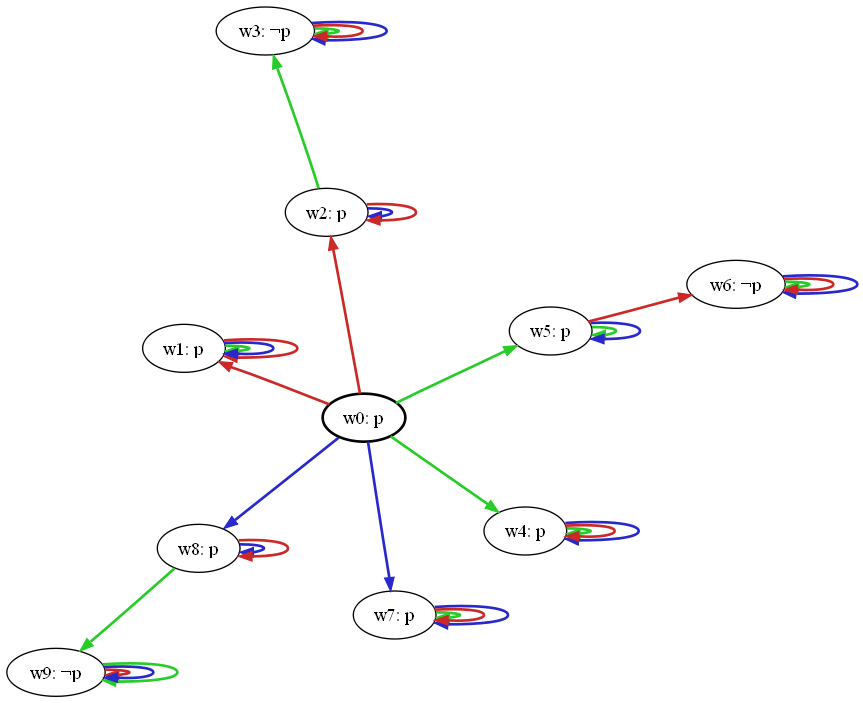

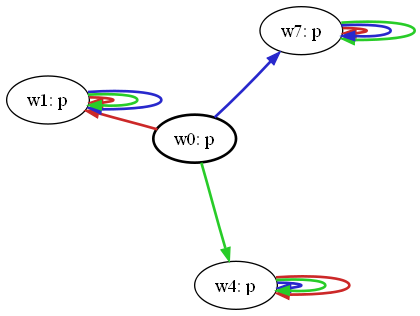

The fourth step is C telling agents A and B if their beliefs regarding p are equivalent or not, which in this case, they are.

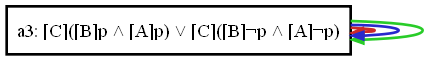

We model this by an action model of agent C announcing that the beliefs are equivalent:

|

| Figure 11 |

In our case, this means that we have an action a3, that has as precondition that agent C knows that agents A and B have equivalent beliefs regarding p. Now in the action model we see that there are arrows from a3 to a3, this means that if we apply the action to the Kripke model, we should retain only branches where it holds that agent A (or B) believes that agent C believes that both K_A p and K_B p. Since we added reflexive arrows for C in the branches of A and B, one can easily see that this indeed holds:

|

| Figure 12 |

We note that the other possible scenarios, e.g. where one of the agents (or both agents) believe ~p, can be modeled in a similar way, only minor changes would be required: the labels of some states (p or ~p) would have to be changed, which would of course cause different branches to be cut from Kripke model in steps 2-4.

We conclude by mentioning that it can be verified that starting from our original Kripke model in Figure 6, the other Kripke models can be fully automatically generated by using the action models described and applying Baltag's algorithm to update Kripke models:

In the first action, 2 branches corresponding to beliefs from C about what A believes are cut from the original model. The reasoning here is that only those nodes that satisfy the precondition K_A p remain;

In the second action, we use the resulting model after the first action, and cut one additional branch. The reasoning here is that only those nodes that satisfy the precondition K_B p remain;

In the third action, we use the resulting model after the second action, and cut two additional branches (corresponding to the incorrect beliefs of agent A and agent B). One can verify that in the resulting branches, the precondition of action a3 indeed holds: for the remaining belief of A it can be derived that K_A K_C (K_B p & K_A p), and for the remaining belief of B it can be derived that K_B K_C (K_B p & K_A p). Note that this is possible because of our convention to represent “stub”-information as reflexive arrows.