Esprit Project 5204: Papyrus

(Pen and Paper Input Recognition Using Script)

1991-1993

|

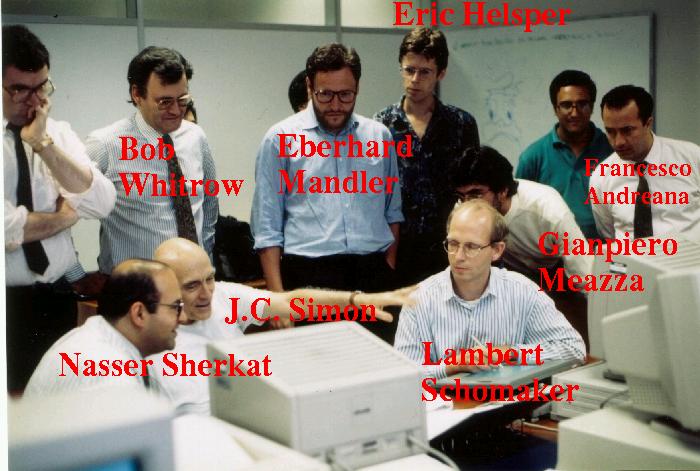

| Esprit Papyrus Review, Pozzuoli, september 1992: Nasser Sherkat (NTU),

Bob Whitrow (NTU), Jean-Claude Simon (reviewer), Eberhard Mandler (reviewer),

Eric Helsper (NICI), Lambert Schomaker (NICI), Gianpiero Meazza (Olivetti),

Francesco Andreana (Olivetti). Picture taken by Hans-Leo Teulings (NICI).

Not visible: Pietro Morasso (UGDIST). At the review, three on-line recognizers

of isolated words (NTU,UGDIST,NICI), were tested together and in isolation.

Nasser wrote a Borda-count rank combiner. Timothy Scanlan demonstrated a

stand-alone recognizer by Digital (not visible). The NICI and UGDIST

recognizers were trainable. The tablet is a monochrome Wacom HD series

digitizer/LCD screen. The application was a Pen-Windows 3.1 program, sending

the on-line XY coordinates of a word to a 486 (sic: powerful!) running an Unix

version by Olivetti (IBISys). The recognition servers on Unix sent their word

lists to the combiner which returned the average-rank hit list back to the

Windows client. At the application level the goal was to illustrate that it

would ultimately be

possible to design pen-based computers in a hospital emergency-entrance context

(Jon Kennedy, CAPTEC). Therefore, the word lexicon consisted of about

7000 medical terms from the targeted hospital context (St. James's Hospital,

Dublin).

|

Some test results on single on-line recognizers for mixed and free-style

words, performed in the year 1992. The number of words in the test

set is 180 words. The lexicon was about 7k words. [The result tables for

the combined (Borda) results are lost in piles of dust, but its

beneficial effect was quite substantial.]

Top-1 recognition (% words correct)

| Writer | UGDIST | NOTPOLY | DIGITAL | NICI

| | a (Irl) | 60 | 68 | 21 | 69

| | b (Irl) | 49 | 38 | 18 | 60

| | c (Irl) | 55 | 29 | 16 | 55

| | d (Irl) | 61 | 39 | 27 | 50

| | e (Irl) | 51 | 22 | 18 | 38

| | f (I) | 81 | 93 | 73 | 83

| | g (I) | - | - | 51 | 77

| | h (I) | 73 | 10 | 30 | 70

| | i (I) | 67 | 4 | 8 | 58

|

|

Top-5 recognition (%words correct)

| Writer | UGDIST | NOTPOLY | DIGITAL | NICI

| | a (Irl) | 76 | 89 | 40 | 83

| | b (Irl) | 60 | 53 | 33 | 73

| | c (Irl) | 67 | 48 | 32 | 76

| | d (Irl) | 73 | 64 | 44 | 70

| | e (Irl) | 62 | 42 | 34 | 50

| | f (I) | 86 | 97 | 86 | 90

| | g (I) | - | - | 71 | 88

| | h (I) | 81 | 17 | 47 | 81

| | i (I) | 76 | 7 | 16 | 58

|

|

At the time, the conclusion was that writing styles are so

diverse that trainable recognizers (UGDIST, NICI) are at an advantage

in free-style handwriting recognition. For example,

the pre-training top-word performance on writer i was 3% for

the NICI recognizer. After training, which consisted of labeling

letters of 64 unrecognized words in the training set, a top-word recognition

of 58% (See table) was achieved for this difficult Italian writer on

the test set.

The static and rule-based systems (NOTPOLY, DIGITAL) performed

very well on the style they were designed for.

NOTPOLY (Bob Whitrow c.s.)

This was arguably the most mature system in this bunch. It started out as

a rule-based classifier for isolated handprint, and was subsequently

upgraded to handle connected cursive. Bob was grinding his teeth whenever

I forgot to cross the "t"s during the demo above. Strong point: it knew

a lot about neat English handwriting. Weak point: not trainable.

DIGITAL (Timothy Scanlan)

This recognizer was rule-based, as well. The essential classification

parameters and decisions where generated by a program which wrote

out hard-coded C. This made the classifier much more compact than any

of the other systems in the bunch. Strong point: efficient.

Weak point: not trainable.

UGDIST (Piero [Pietro] Morasso c.s.)

UGDIST had introduced the fabulous Kohonen self-organizing map to us at NICI.

The UGDIST classifier stored allographs in groups, which were determined

by the number of velocity-based strokes: Kohonen maps for 1-stroked,

2-stroked, etc. N-stroked character shapes, using Cartesian features.

Template matching was used.

Strong point: generalization was good, trainable. Weak point: slow,

distinction in several Kohonen maps was a little bit cumbersome.

NICI (Lambert Schomaker + Hans-Leo Teulings, c.s.)

Having learnt about Kohonen maps through Piero Morasso, we stuck

to using Kohonen maps for single velocity-based strokes, using mainly

angular features. The trick of transition-network coding was used to

jump from the stroke to the character level. Strong points:

normalization, fast on-line trainability. Weak point: brittleness

in the process of character search: noisy strokes between

characters would make the search go astray...

...We knew since 1990 that HMM's probably would be able to solve this latter

problem, but we did not spend efforts in this area, which was being explored

by Yann le Cun who introduced the hybrid HMM/convolutional TDNN.

Later, Stefan Manke made us regret our lazyness at the 1995 ICDAR,

with his very good HMM/convolutional TDNN recognizer (NPen++).

Both the UGDIST and NICI recognizers relied on equidistant time

sampling, in order to be able to compute velocities. With a DOS client,

this was no problem. Under Windows, it proved to be a hassle.

Live echoing of ink at 100 coordinates per second is not what a 25 MHz

486 running Windows 3.1 could handle easily. The NOTPOLY and DIGITAL

recognizers were less upset by variations in sampling rate.

The recognizer characteristics as of 22 sept 1992

| | NOTPOLY | DIGITAL | UGDIST | NICI

|

|---|

| Signal Processing

|

|---|

| sampling | spatial | spatial | temporal | temporal

|

| smoothing | X | X | X | X

|

| Normalizations (pre-feature calculation)

|

|---|

| slant | X | - | - | X

|

| rotation | X | - | - | X

|

| size | X | X | NA | X

|

| Feature processing

|

|---|

| strokes | X | X | X | X

|

| full characters | - | - | X | -

|

| angular features | X | X | X | X

|

| cartesian features | X | - | X | X

|

| structural features | X | - | X | X

|

| neural network | - | - | Kohonen/Fritzke | Kohonen

|

| User aspects

|

|---|

| training, off-line/batch | - | - | X | X

|

| training, interactive | - | - | - | X

|

| Writing Style

|

|---|

| hard-coded style assumptions | X | X | - | -

|

| connected cursive | X | X | X | X

|

| mixed cursive | X | X | X | -

|

| handprint | X | X | - | -

|

| Heuristics

|

|---|

| allow & use presence of i,j dots | X | - | X | X

|

| allow & use presence of deferred t bars | X | X | X | -

|

| Dictionary

|

|---|

| on-line adaptable | - | - | - | X

|

| tested max. size (kWords) | 70 | 40 | 10 | 10

|

| use of lexicon in recognition | post | embedded | post | post

|

| System

|

|---|

| progr. language | C | C | C | C

|

| OS | Unix,DOS | Unix,VMS,DOS | Unix | Unix

|

| memory footprint, inc. dictionary | 0.5 MB | 250 kB | 1 MB | 2.5 MB

|

After the Esprit review, the conclusion for our NICI recognizer was that

we definitely needed to add code for the deferred t-bar crossing,

and that handling isolated characters as a special case would

pay off. Before that, we had treated all script styles as chains of

strokes. In case of isolated handprint, however, there may be "rubble"

strokes between the characters, due to pen switching or all kinds of

embellishing ligatures which are not present in connected cursive.

Undoubtedly the other project partners had their own learning moments on

the basis of the review process.

Other historical notes

During this period, the Paragraph company sold its recognizer

for on-line cursive words to several companies (Apple and others).

Their system was also rule based, using structural features. One of

its virtues was the fact that it could handle upper- and lowercase

cursive characters within words (we could not, yet). In 1993,

Goldberg & Richardson introduced their stylized unistroke alphabet

at the InterCHI'93, Amsterdam. If the audience would have possessed

tomatos, they almost certainly would have used these. We, as pattern

recognizers considered unistroke classification as a problem for 1st-year

university students (in contrast to cursive-word recognition).

The HCI audience was appalled by the idea that users should adapt their

writing behavior to the machine. In the end we were all proven wrong, as

the Graffiti version of the Goldberg & Richardson alphabet became a

huge success on Palm-Pilot organizers and the likes.

Today: the automatic recognition of on-line contemporary script

and off-line historical script remains a tremendous challenge

in science and technology research.

schomaker@ai.rug.nl