Separability versus prototypicality in handwritten word-image

retrieval (2013).

Jean-Paul van Oosten, Lambert Schomaker

Pattern Recognition 47.3

Available online 20 September 2013

Department of Artificial Intelligence, University of Groningen,

PO Box 407, 9700 AK, Groningen, The Netherlands

DOI: 10.1016/j.patcog.2013.09.006

ScienceDirect

The context of the current study is the development of a search engine for historical handwritten documents, Monk.

We need a massive amount of labeled handwritten word images to bootstrap this system.

So, one selects a shape feature, trains a support-vector machine on a word class, and uses k-fold evaluation to choose the best classifier, all according to best practices. And the Recall and Precision look very good! However, the actual retrieval results on a dataset of realistic size appeared to be very disappointing!

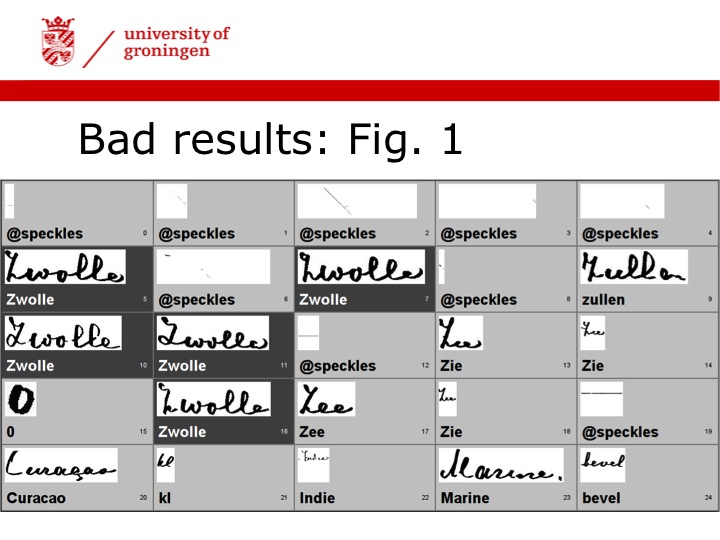

In Figure One we see the top of the hit list for the Dutch word ‘Zwolle’.

The instances are sorted on the basis of the distance from the SVM's margin. The hit list contains quite a number of counter-intuitive hits.

Why? How is this possible: a Good classifier; but Bad ranking?

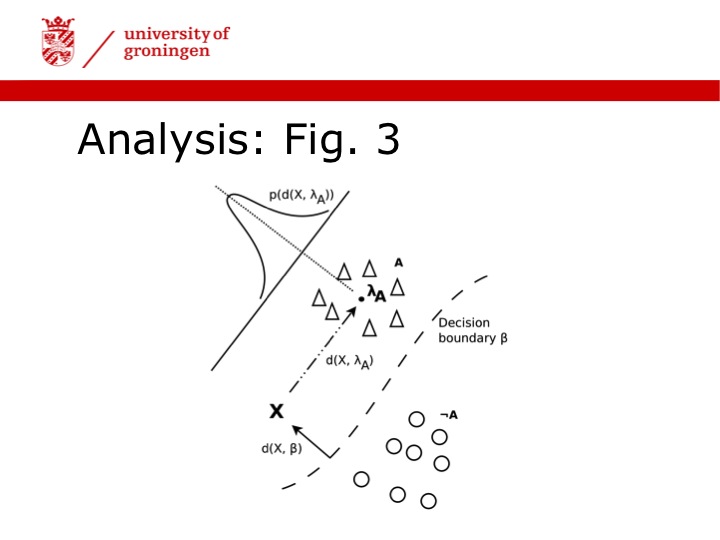

The support vector machine is a discriminative classifier and optimised for classification. The class of an unknown sample is decided by determining on which side of the decision boundary the sample falls. However, a sample that has a large distance from this boundary is not necessarily a very prototypical example. In other words, it might have a large distance to the prototype of the class.

This is the distinction between Separability and Prototypicality: Separability is the ability to separate class instances from non-class instances, something the SVM is very capable of. Prototypicality is the similarity of a pattern to its canonical prototype.

The hypothesis: Both separability and prototypicality need to be optimised. A separation-based heuristic is apparently not enough.

This is all very interesting but I first want to come back to what you are doing.

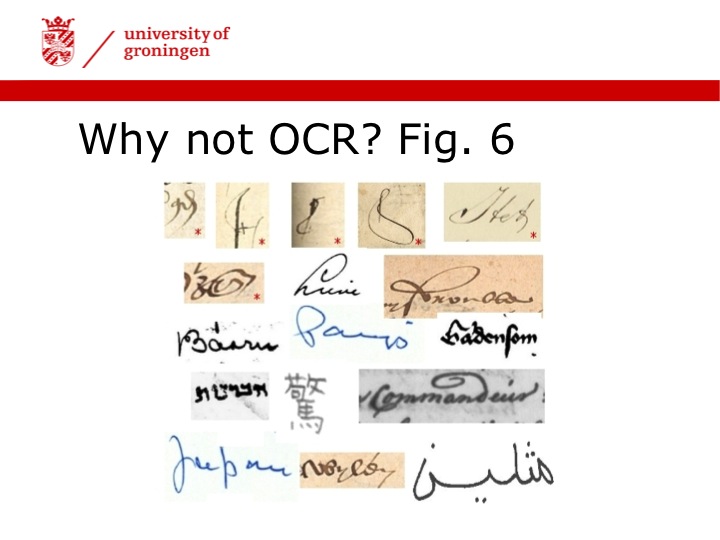

Why do you focus on whole words as the object of recognition? Why don't you use Optical Character Recognition, with a sliding window and maybe a hidden Markov model? Furthermore, you make no use of linguistic context.

In Fig. 6, we show example images illustrating why OCR is very difficult in handwritten word recognition. There are a lot of contractions, suggested characters that are completely missing from the image! In some scripts, there may be curls that are from 'future' letters, which makes the sliding window method vulnerable.

Regarding context: Current computational language models are trained on millions of text files. This is not feasible for many historical document collections, because there is not enough encoded text yet.

Indeed, we start from scratch with document images from an unknown script style, with an unknown language, with unknown content. In bootstrapping, everything has to be learned from scratch.

Therefore we focus on convenient methods and use an object that has a redundant shape: THE WORD. Additional methods can be applied later, when enough data has been collected.

Let's get back to the basic tenet of this paper. So, you say that two functions need to be optimized: separability and prototypicality: One for finding the needle in the haystack, the most likely word class and one for optimal, intuitive ranking, with the nice examples at the top.

How are you going to test whether this really works?

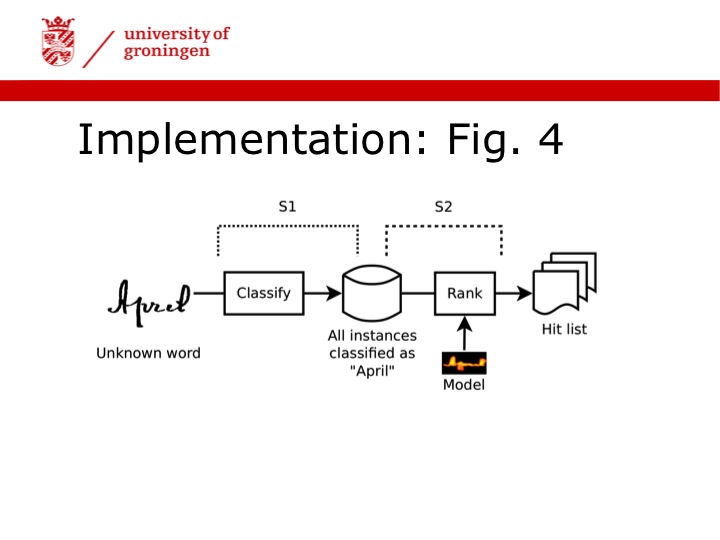

We implement the system as described in Fig. 4: Classify an image first, as being a word from the lexicon of all possible words. Second, rank the found instances, using another feature method, that is better suited for intuitive ranking.

We are going to execute a number of experiments, varying complexity and order of the steps. The performance criteria are Accuracy, Precision, Recall and Edit distance. The edit distance is used as a measure for intuitiveness of the hit list.

So what are the results?

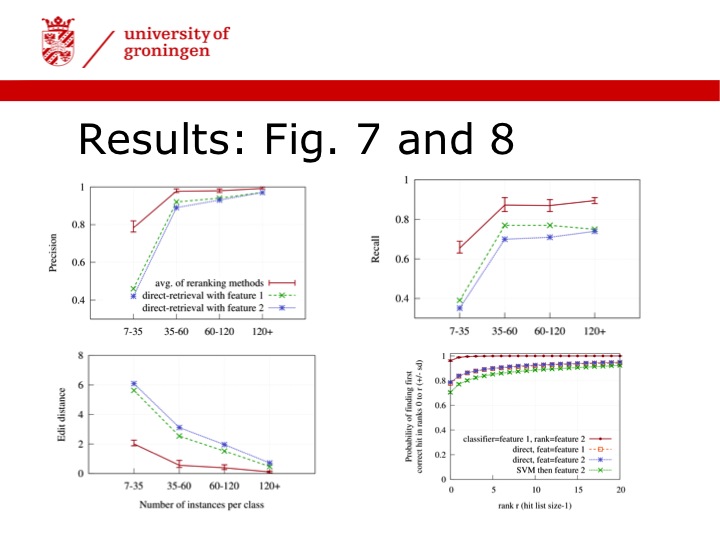

The results are shown in Fig. 7 and 8. First, we see that a dual-step method largely out-performs the single-step SVM approach.

Second, notably, the edit distance shows the large benefit of ranking in the second stage, boosting the intuitiveness of a hit list.

Third, the precision tends to converge between the methods if the number of training examples is large. Therefore, the benefits of the dual-step approach are most evident in the early stage of bootstrapping.

Conclusion:

We have shown that a method that performs well in classification does not by necessity yield good ranking in retrieval. However, by applying two processing stages, we can optimise both separability and prototypicality —that is a proper ranking— in the second step.

This approach has been implemented in the large-scale Monk search engine, considerably facilitating the human labeling of words, especially in the beginning of the training-cycle.

The iterated application of the dual step approach, which is of course taking place in the daily procedures of the Monk system, reminds us of the Fahrkunst elevator in ancient mines, which uses an alternation of steps to get at a higher level.